Should machines be permitted to take a human life?

This month marks 100 years since the beginning of World War One. For many working to advance humanitarian disarmament, it is a time to reflect on the changing nature of warfare over the past century and consider whether the dire situation faced by civilian victims of warfare is likely to get any better in the future. How will civilians be affected by the introduction of increasingly autonomous military technologies that place human soldiers ever further away from the field of operation and perhaps one day remove them altogether? What will war look like a hundred years from now – in 2114?

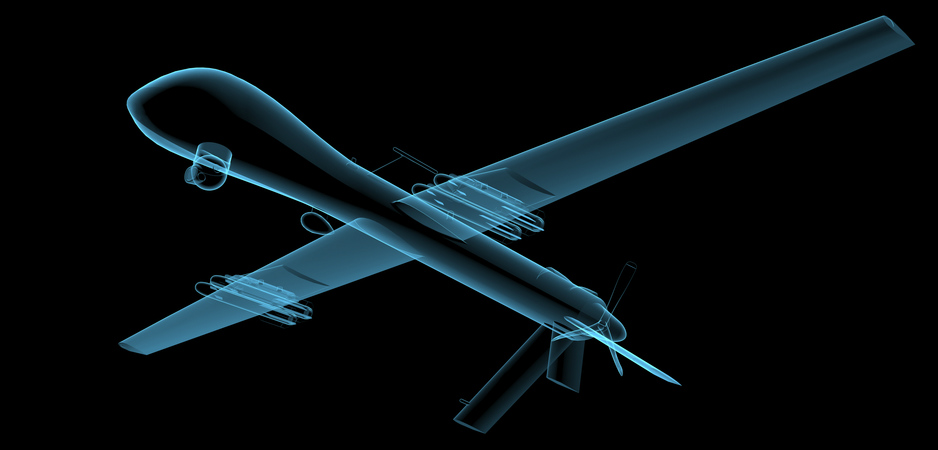

Lethal Autonomous Robots

Science fiction has already given us dozens of scenarios to select from. Read Kill Decision by Daniel Suarez to imagine a world in which military drones are fully autonomous and “programmed to find and kill their victim, and then to self-destruct.” Watch José Padilha’s “Robocop” to see what happens to domestic policing when fully autonomous weapons from the battlefield are introduced on city streets.

We can’t say these represent accurate depictions of future fighting, but several examples of robotic systems with various degrees of autonomy and lethality already exist. We see what that means with drones or remotely piloted aerial vehicles, which have a human in control from afar but still “in-the-loop.” A 2012 report by Human Rights Watch and a 2013 report by a United Nations expert have identified several air, land, and sea-based precursors to fully autonomous weapons that are being developed, such as Samsung’s SGR-1 sentry robot, which South Korea has placed in the Demilitarized Zone with North Korea. This stationary device is equipped with sensors that detect movement and – in accordance with its current “on-the-loop” setting – send a signal to a command center where human soldiers determine if the individual identified poses a threat and decide whether to fire the robot’s machine gun or grenade-launcher.

Large autonomous combat aircraft prototypes such as the X-47B (US) and Taranis (UK) are other examples of precursors that indicate the trend toward ever-greater autonomy in warfare, driven in part by a desire to travel ever-faster over ever-greater distances and the question of how to complete missions when communications are broken.

The degree of autonomy in current systems is seen by some as bringing greater accuracy and efficiency, but others want to go even further and pursue weapons systems that, once activated, would select and fire on targets on their own – without any meaningful human control. One roboticist contends that in the interest of “saving human lives,” scientists have a responsibility to “look for effective ways to reduce man’s inhumanity to man through technology,” through research and development into “ethical” military robotics. Georgia Tech’s Ron Arkin believes that, “The advent of [autonomous weapons] systems if done properly could possibly yield a greater adherence to the laws of war by robotic systems” than by human soldiers.

Limitations and Future Risks

On the opposing side are 272 engineers, computing and artificial intelligence experts, roboticists and scientists from 37 countries. They have co-signed a statement by the International Committee for Robot Arms Control (ICRAC) calling for a pre-emptive ban on the development and deployment of killer robots “given the limitations and unknown future risks of autonomous robot weapons technology” and because of their belief that “decisions about the application of violent force must not be delegated to machines.”

These scientists question the notion that robotic weapons could meet legal requirements for the use of force “given the absence of clear scientific evidence that robot weapons have, or are likely to have in the foreseeable future, the functionality required for accurate target identification, situational awareness or decisions regarding the proportional use of force.”

This week, Clearpath Robotics joined that call when it issued a statement committing to “not manufacture weaponized robots that remove humans from the loop.” The Canadian company is believed to be the first commercial entity to make such a pledge and its co-founder explained the decision involved choosing “to value our ethics over potential future revenue.”

Taking Action to Address Killer Robots

In late 2012, ICRAC joined with Human Rights Watch and other non-governmental organizations to form the Campaign to Stop Killer Robots. In the less than two years since, the matter of what to do about fully autonomous weapons has vaulted to the top of the global disarmament and human rights agenda.

The year 2013 was one of many “firsts” for those involved in efforts to address fully autonomous weapons. The Campaign to Stop Killer Robots held its first press conference in April to kick off public outreach efforts. The United Nations’ first report and Human Rights Council debate on the matter of “lethal autonomous robots” came in May. Many governments spoke for the first time on killer robots at the UN General Assembly in October. And in November, nations at the Convention on Conventional Weapons (CCW) agreed by consensus to begin work in 2014 to “discuss the questions related to emerging technologies in the area of lethal autonomous weapons systems.”

Representatives from 87 nations, the International Committee of the Red Cross (ICRC), UN agencies, and the Campaign to Stop Killer Robots gathered at the UN in Geneva for the first “informal meeting of experts” on killer robots on May 13-16.

For many, the CCW venue holds promise as its members include all five permanent members of the UN Security Council and “major players” working on the development of autonomous weapons, such as Israel and South Korea. The framework convention’s protocol banning blinding lasers also provides a pertinent precedent for a pre-emptive ban on a weapon before it is developed or used.

During the meeting a few countries delivered vague statements that appeared to seek to leave the door open for future technologies, but none vigorously defended or argued for fully autonomous weapons. Most nations that spoke highlighted the importance of maintaining meaningful human control over targeting and attack decisions. The US spoke about its 2012 Department of Defense policy directive that requires “appropriate” levels of human judgment over the full range of activities involved in the development and use of such weapons.

During the meeting, the ICRC observed that, “There is no clear line between automated and autonomous weapon systems.” It proposed that, “Rather than search for an unclear line, it may be more useful to focus on the critical functions of weapon systems” or “the process of target acquisition, tracking, selection, and attack.”

Countries at the meeting acknowledged that international humanitarian and human rights law applies to all new weapons, but they were divided on whether the weapons would be illegal under existing law or if their use would be permitted in certain circumstances. There was robust debate on the ability of existing international law to prevent the proliferation and use of fully autonomous weapons.

At the CCW experts meeting, nations for the first time discussed ethical, moral and societal expectations on the question of human dignity and whether machines should be permitted to take a human life. Germany pointedly noted that what is socially acceptable evolves with the appearance of new technologies so that what was once considered unacceptable can become normal. But it also warned: “We do not want our society to get used to the idea of autonomous weapons deciding life and death.”

Other countries expressed concern that fully autonomous weapons could lower the threshold for the use of force. More than 20 Nobel Peace Prize laureates endorsed a joint statement that the Nobel Peace Laureate Jody Williams delivered to the meeting, expressing concern that “Leaving the killing to machines might make going to war easier and shift the burden of armed conflict onto civilians.” The laureates called for a ban on weapons that would be able to select and attack targets without meaningful human control.

The discussion of operational or military aspects relating to autonomous weapons saw many questions relating to cost, the adequacy of the military’s traditional command-and-control structure to manage the challenges the weapons could pose, and proliferation concerns.

Next Steps

Overall, this first multilateral meeting on killer robots was characterized by a positive atmosphere and a high degree of engagement by governments and nongovernmental groups alike, as dozens of nations provided detailed national statements, made comments, reacted, and asked questions.

While the meeting is widely viewed as a success, the one glaring exception was the lack of women experts in the line-up of 17 presenters. This has been attributed to a lack of input from CCW countries to the meeting’s chair, even though many women are publishing and speaking on the topic. It appears to be symptomatic of a broader failure to recognize the contributions that women are making in this field.

Several initiatives have resulted from this concern, including a list of women who are speaking and writing about autonomous weapons and a list of men who have pledged not to accept invitations to speak on all-male humanitarian disarmament panels. Government and UN bodies need to ensure diversity in deliberations relating to disarmament, peace, and security, and to recognize, solicit, and promote women’s expertise.

From the chair’s report of the experts meeting as well as the active participation and broad media coverage, it is clear that there is strong interest in continuing deliberations on the matter. But it is never possible to be certain of any decision made by consensus at the CCW. The weeks leading up to the November 14 decision by nations at the next CCW annual meeting will show if any will object to continuing this work at the CCW in 2015.

Until new international law is achieved, all countries should implement the recommendations of the 2013 and 2014 reports by the UN special rapporteur on extrajudicial, summary or arbitrary executions, including the call for an immediate moratorium on fully autonomous weapons.

For these international deliberations to have any depth, all nations need to develop and articulate their policy on fully autonomous weapons in consultation with relevant actors, including nongovernmental experts.

The swift response by governments, UN agencies, the ICRC, scientists, and activists to the challenges posed by fully autonomous weapons shows this is a serious matter deserving immediate attention. These actors will need to coordinate and collaborate if the tentative discussions that have started are to result in guarantees that humans will have meaningful control of autonomous weapons systems in the future.

The views expressed in this article are the author’s own and do not necessarily reflect Fair Observer’s editorial policy.
